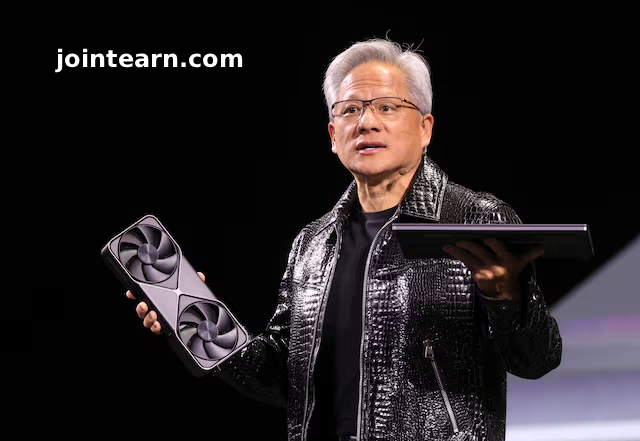

Nvidia CEO Jensen Huang said on Monday at the Consumer Electronics Show (CES) 2026 that the company's next-generation artificial intelligence (AI) chips are now in full production. He said they offer up to five times the computing power of Nvidia's previous chips for AI applications such as chatbots and other AI-driven services.

Vera Rubin Platform: A Leap in AI Computing

Huang provided new details about the Vera Rubin platform, which will debut later this year. The platform consists of six separate Nvidia chips. Its flagship servers contain 72 graphics processing units (GPUs) and 36 next-generation central processing units (CPUs).

The Vera Rubin system is designed to scale into "pods" of more than 1,000 chips. According to Nvidia, this lets AI companies generate "tokens", the basic units of data that AI models process and produce, 10 times faster than they can with current hardware.

Huang emphasized that the performance gains do not come solely from adding transistors, since the new chips have only 1.6 times as many transistors as their predecessors. Instead, the gains rely on a proprietary type of data processing that Nvidia hopes the industry will widely adopt.

Enhancements for AI Applications

The new chips include context memory storage, which makes chatbots more responsive to long questions and conversations. Huang also highlighted networking advances built on co-packaged optics, which are designed to link thousands of machines so that they work together efficiently. These networking products compete with offerings from Broadcom and Cisco Systems.

Nvidia confirmed that early adopters of the Vera Rubin systems will include CoreWeave, with major cloud and tech companies such as Microsoft, Oracle, Amazon, and Alphabet expected to deploy the technology later this year.

AI Software and Autonomous Driving

In addition to hardware, Nvidia showcased Alpamayo, new AI software aimed at self-driving vehicles. The platform not only helps autonomous cars make safer decisions but also records data and decision paths for engineers to evaluate later. Huang announced that both the models and training data for Alpamayo will be open-sourced to improve transparency and trust in AI development.

Competition and Strategic Moves

Despite Nvidia's market leadership in AI chips, the company faces growing competition from traditional rivals such as AMD and from tech giants such as Google, which designs its own AI chips and is working with companies such as Meta Platforms.

Huang also addressed Nvidia’s recent acquisition of talent and chip technology from startup Groq, noting that while it does not affect Nvidia’s core business, it may lead to expanded product offerings in the future.

Global Demand and Geopolitical Considerations

Huang said that Nvidia's previous-generation H200 chips remain in high demand, particularly in China. Although U.S. export rules now permit H200 shipments, Nvidia is still awaiting approval for new shipments amid heightened scrutiny of technology exports. CFO Colette Kress confirmed that license applications are under review by U.S. and other government authorities.

Huang stressed that the current Blackwell platform and the upcoming Vera Rubin platform are designed to give AI customers significantly greater efficiency and performance, which Nvidia expects will help it maintain its lead in the competitive AI hardware market.

Looking Ahead

The CES 2026 announcements reinforce Nvidia’s strategy of combining advanced chip hardware, AI software, and networking solutions to serve both cloud-based AI companies and enterprise clients. Analysts note that the Vera Rubin platform positions Nvidia to extend its dominance in AI computing while enabling customers to handle larger models and more complex workloads efficiently.

Leave a Reply